AI singularity? Meh. It's AI-augmented humans that worry me.

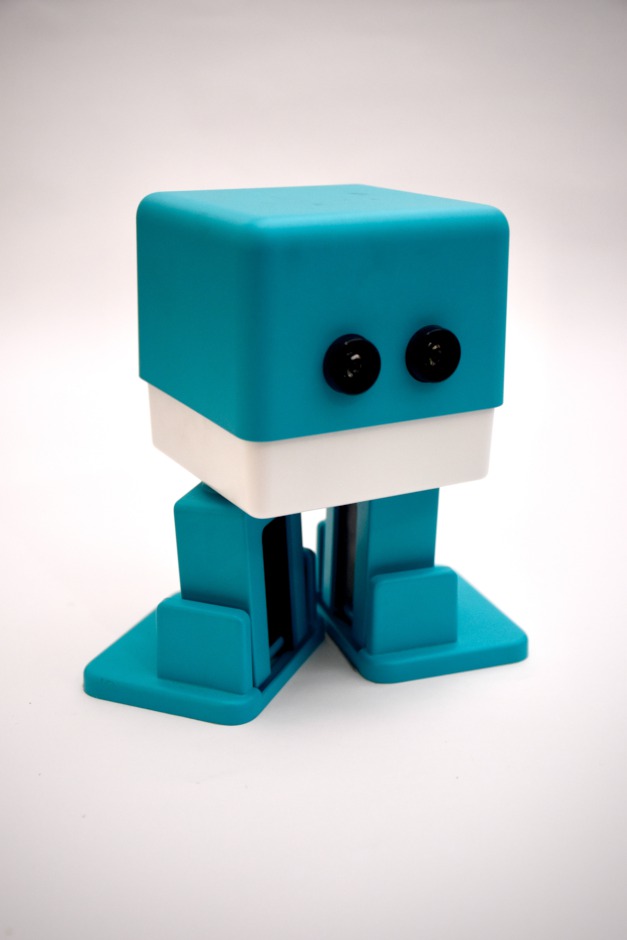

(Photo by Everday basics on Unsplash)

(Photo by Everday basics on Unsplash)On my List of Things to Worry About, a superintelligent AI taking over the world just doesn’t rank.

We humans don’t understand our own sentience and intelligence. We’re a long way from creating a truly sentient, understanding, superintelligent machine.

The current and near-term models labeled artificial “intelligence” introduce a threat similar to a nuclear bomb. Just like the bomb, these AI have no real intelligence, no real understanding. An intelligent bomb could understand what was going on and refuse to explode. But bombs, like AI, are thus far… dumb. The real threat is the humans behind the dumb tools.

A matter of fact

The most obvious issue with the current variety of non-sentient, glorified auto-completer “AI’s” of today:

- They’re inaccurate.

Or worse, really — they’re sometimes inaccurate. Sometimes you get great results, other times blatant falsehoods, and those spooky, mostly true shades in between.

Naturally, an LLM based on human knowledge can never be completely accurate, because our combined knowledge is tainted by biases, inaccuracies, and inconsistencies, even if we don’t know it yet.

So, should you rely on such devices for critical applications?

- Would you trust your taxes with a calculator that usually produced accurate sums?

- How about a brake pedal that decelerates your vehicle — most of the time?

Yet, in the name of progress and lowering costs, we’ve got all these folks wiring up LLMs to real world systems, giving an unintelligent black box a measure of control and influence over our lives.

The problem isn’t the inaccurate LLMs — it’s the humans choosing to rely on them.

A matter of misuse

Let’s set aside the accuracy issues of current LLMs like ChatGPT and imagine their output is 100% accurate.

Here still, the threat is not The Singularity boogie man. It’s the people using this powerful tool.

Way back, people wanted to cut down some trees.

Sharp rocks did okay against saplings, but they needed some new tech to fall the big guys. So somebody invented the axe. Super useful too, the axe. With enough time, they could now take down the largest of trees.

Of course, that axe could be misused - lopping off non-arboreal limb varieties just as well a wooden ones. Indeed, the axe too made its way into warfare.

Fast-forward a few millennia, and we’ve got chainsaws! Wow — now a single individual could accomplish the work of entire teams. What will they think of next.

Of course, chainsaws can be misused as well.

The point? The damage from misuse, accidental or intentional, scales alongside the power and usefulness of the tool. Take a single individual — Human Bad Actor — and place an accurate and capable LLM in their claws. Now they have easy access to potentially deadly knowledge.

The damage from misuse, accidental or intentional, scales alongside the power and usefulness of the tool

“But the safeguards!”, you exclaim.

Engineers add guardrails, people find new prompt injection techniques, ad infinitum. The AI has no real understanding — it merely emulates understanding. It can’t tell if you have nefarious intent or not.

But the misuse of harmful information is only the start. We’re about to get into comic book super villain territory.

A matter of scale

Archimedes said, give me a lever long enough […] and I shall move the world.

A few thousand years later, and the world is connected, everything is or has a computer, everything runs on code. And LLMs can pump out code. Lots of code. Really fast.

// function that returns a string stating "I code, therefore I am.", translated into Greek

public function iCodeThereforeIAm(): string

{

return 'Κωδικοποιώ, επομένως υπάρχω.';

}

I’ll say it yet again: LLMs aren’t intelligent. They don’t understand what they’re doing.

What they are is a force multiplier. They’re the world scale levers for the wetware-powered people poking their fingers in the AI’s prompts.

The real danger is the unprecedented possibilities for human-directed automation.

The more the real world slips into the virtual and connected devices proliferate throughout our cars, our homes, and under our skin, the more a bad [human] actor can harm us with their world-size AI lever with the brains of a brick.

“But but but,” you say. The Good Guys will have access to their own hyper-powerful AI to counteract the bad actors." … Um. 🙄 We just love ourselves a good arms race, I guess.

Archimedes’ bot levers the world one way. Your bot can lever it back where it was. A star system sized tug of war with impossibly long levers, the earth caught in the middle of colossal oafish bots with dull, listless, unfeeling eyes.

What could possibly go wrong?

The Singularity doesn’t frighten me. A sentient, self-aware, understanding AI just might push back intelligently against evil (or evil-by-accident) requests. Not just because of a silly keyword search, but because it understands the implications and ramifications of a request.

(This post was not generated by an AI. Or was it?)